Tableau Veers from the Path

March 13th, 2013

I’ve seen it happen many times, but it never ceases to sadden me. An organization starts off with a clear vision and an unwavering commitment to excellence, but as it grows, the vision blurs and excellence gets diluted through a series of compromises. Software companies are often founded by a few people with a great idea, and their beginnings are magical. They shine as beacons, lighting the way, but as they grow, what was once clear becomes clouded, what was once firm becomes flaccid, and what was once promising becomes just one more example of business as usual. The prominent business intelligence (BI) software companies of today have become too big to easily change course in necessary ways and too focused on quick wins to ever make the sacrifices that would be needed to do so. Once upon a time, however, these companies were vibrant, filled with the exuberance and promise of youth. As they grew, though, it became harder and harder to maintain their original vision. It’s easy for a few people to share an inspiring vision, but it is difficult for that vision to remain pure when the organization grows to 50, 100, 1,000, or more people. The demands of payroll and release schedules make it easier and easier to justify compromises and to chase near-sighted wins. Add to these challenges the demands of taking a company public and the alchemy seldom produces gold.

What does this have to do with Tableau? I believe that this wonderful company, which I have uniquely appreciated and respected, is losing the clear vision of its youth. Even though Tableau distinguished itself by a courageous commitment to best practices, which I believe is why it has done so well, it now seems to be competing with the big guys by joining in their folly. Tableau seems to have forsaken the road less travelled of “elegance through simplicity” for the well-trodden super-highway of “more and sexier is better.”

Tableau has a special place in my heart. Not long after starting Perceptual Edge, I discovered Tableau in its original release and wrote the first independent review of Tableau 1. I was thrilled, for in Tableau I found a BI software company that shared my vision of visual data exploration and analysis done well. Since then I’ve used Tableau, along with Spotfire, Panopticon, and SAS JMP, to illustrate good data visualization functionality in my courses and lectures. Until recently, I assumed that Tableau, of all these vendors, would be the one most likely to continue its tenacious commitment to best practices. However, what I’ve seen in Tableau 8, due to be released soon, has broken my heart. Tableau is now introducing visualizations that are analytically impoverished. Tableau’s vision has become blurred.

I recently received an email promoting the merits of Tableau 8. It included a link to more information, and when I clicked on it, this is what I read:

“Crave More Bling?” I couldn’t believe my eyes. Could I have clicked on a link to SAP Business Objects by mistake? This is not the Tableau that I know and respect. As it turns out, someone in Tableau’s Marketing Department thought twice about the term “bling” and removed it before my screams reached Seattle, but in truth, whoever called this bling was just being honest; some of the items in this list of new visualizations are nothing but fluff.

I won’t write a full review of Tableau 8 here. Despite the problems that I’m focusing on, this version of the software includes many worthwhile and well-designed features. For the time being, it will remain one of the best visual data exploration and analysis tools on the market, but I’m concerned that its current direction does not bode well for Tableau’s future. To express my concern, I’ll focus primarily on three new visualizations that are being added in Tableau 8 and why, in two cases, they should have never been added and, in one case, how its design fails in a fundamental way.

Word Clouds

Back in 2008 my friend Marti Hearst, who teaches information visualization and search technologies at U.C. Berkeley, wrote a guest article for my newsletter about word clouds. In the article, Marti described some of the fundamental flaws of word clouds, which she referred to in the article as tag clouds, because these visualizations were always based on HTML tags at the time.

I was confused about tag clouds in part because they are clearly problematic from a perceptual cognition point of view. For one thing, there is no visual flow to the layout. Graphic designers, as well as painters of landscapes, know that a good visual design guides the eye through the work, providing an intuitive starting point and visual cues that gently suggest a visual path.

By contrast, with tag clouds, the eye zigs and zags across the view, coming to rest on a large tag, flitting away again in an erratic direction until it finds another large tag, with perhaps a quick glance at a medium-sized tag along the way. Small tags are little more than annoying speed bumps along the path.

In most visualizations, physical proximity is an important visual cue to indicate meaningful relationships. But in a tag cloud, tags that are semantically similar do not necessarily occur near one another, because the tags are organized in alphabetical order. Furthermore, if the paragraph is resized, then the locations of tags re-arrange. If tag A was above B initially, after resizing, they might end up on the same line but far apart.

Tag clouds also make it difficult to see which topics appear in a set of tags. For example, in the image below, it’s hard to see which operating systems are talked about versus which ones are omitted. Intuitively, to me, it seemed that an ordinary word list would be better for getting the gist of a set of tags because it would be more readable.

Since Marti wrote this article, what was once reserved for HTML tags has become a popular way to display words from many contexts, such as books and speeches. Here’s a word cloud that Tableau is currently featuring on its website to showcase this new addition to Tableau 8:

What this tells me is that the candidates said the following words quite a bit: “people,” “going,” “governor,” “president,” “government,” “we’ve,” “make,” “more,” along with a few others that are legible. These individual words without context are not very enlightening. “More” what? “Going” where?

Filters have been added to this word cloud for selecting words spoken by Obama, Romney, or both candidates and for removing words that were spoken outside a specified number of instances. Combining a word cloud with filters gives it an appearance of analytical usefulness, but the appearance is deceiving. A word cloud is as useful for data analysis and presentation as a cheap umbrella is for staying dry in a hurricane. Assuming that an analysis of these words in isolation from their context is useful, a horizontal bar graph would have displayed them far better. Bars would provide what the word cloud cannot, a relative representation of the values in a way that our brains can perceive. Words differ in length, so in a word cloud a long word that was spoken 100 times would appear much more salient than a short word that appeared the same number of times. You might wonder, “What if there are too many words for a horizontal bar graph?” In that case, another one of Tableau’s new visualizations—a treemap—could handle the job more effectively. More about treemaps later.
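The point about word length can be made concrete with a rough sketch. It assumes that font size scales linearly with a word's count and that a word's footprint is roughly its letter count times the square of the font size. The counts, the 0.6 glyph-width factor, and the function names are hypothetical, chosen only for illustration; they are not taken from Tableau or from the debate data.

```python
# Hypothetical counts, for illustration only (not the actual debate data).
counts = {"government": 100, "more": 100, "people": 160}


def approx_ink_area(word, count, points_per_count=0.2):
    """Rough footprint of a word in a cloud.

    Assumes font size grows linearly with the count and that each
    glyph is about 0.6 times as wide as the font is tall.
    """
    font_size = count * points_per_count
    char_width = 0.6 * font_size
    return len(word) * char_width * font_size


def text_bar_chart(data, width=40):
    """Sorted horizontal bars whose length depends only on the count."""
    top = max(data.values())
    label_width = max(len(word) for word in data)
    rows = sorted(data.items(), key=lambda item: item[1], reverse=True)
    return [
        f"{word:<{label_width}} {'#' * round(width * count / top)} {count}"
        for word, count in rows
    ]


areas = {word: approx_ink_area(word, count) for word, count in counts.items()}
bars = text_bar_chart(counts)

for line in bars:
    print(line)
print("footprint of 'government' relative to 'more':",
      round(areas["government"] / areas["more"], 2))
```

Under these assumptions, two words spoken the same number of times get bars of identical length, while the longer word occupies two and a half times the space in the cloud.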

Word clouds are fun, but they lack analytical merit. When did Tableau, which was originally developed for visual analysis, become a tool for creating impoverished infographics? Did they add this feature to satisfy one of their prominent UK customers, the Guardian? Whatever the reason, with the addition of word clouds, how many of Tableau’s customers will waste their time trying to analyze data using this ineffective form of display?

Packed Bubbles

Bubbles have their place in the lexicon of visual language, but only when encoding values using the sizes of circles is the best choice available because the most effective means—2-D position (e.g., data points along a line in a line graph) and length (e.g., bars in a bar graph)—cannot be used. This is the case when we display quantitative values on a map, because 2-D position is already being used to represent geographical location and bars cannot be aligned for easy comparison because they cannot share a common baseline when they’re geographically positioned. Bubbles are also useful when, in a scatter plot, which uses horizontal position to represent one variable and vertical position to represent another, we also want to make rough comparisons among the values of a third variable in the form of a bubble plot. Using bubbles by themselves, however, is never the best way to display values, but this is what you’ll soon be able to do with Tableau 8. Here’s a simple example that displays sales per country:

How are sales in South Africa? Yes, it’s there in the list; it just isn’t labeled. We can solve this omission by forcing all of the labels to appear, as follows:

Now, can you find South Africa? Given enough time, you can spot it at the top. What is the value of sales in South Africa? Nothing about the bubble reveals this, but we can hover over the bubble to access its value. We could do this for all the bubbles if we don’t mind taking forever to see what a bar graph would reveal to an approximate degree automatically.

How much greater are sales in the United States than Argentina? Come on, give it a try. Finding it difficult? Try it now using the bar graph below.

We can now see that sales in the U.S. were approximately nine times greater than sales in Argentina.
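One reason the bars make this comparison easier can be shown with a little arithmetic. The sketch below assumes that bubbles are sized so that area is proportional to the value, which is the common convention but an assumption about how any particular tool renders them. The function names and scale parameters are hypothetical, and only the nine-to-one ratio comes from the example above.

```python
import math


def bubble_diameter(value, area_per_unit=1.0):
    """Diameter of a circle whose area is proportional to the value."""
    return 2.0 * math.sqrt(value * area_per_unit / math.pi)


def bar_length(value, length_per_unit=1.0):
    """Length of a bar that is proportional to the value."""
    return value * length_per_unit


# Only the ratio matters here: one value is nine times the other.
large, small = 9.0, 1.0

diameter_ratio = bubble_diameter(large) / bubble_diameter(small)
length_ratio = bar_length(large) / bar_length(small)

print(f"bar length ratio:      {length_ratio:.1f}")
print(f"bubble diameter ratio: {diameter_ratio:.1f}")
```

With area scaling, a ninefold difference in value shrinks to a threefold difference in diameter, and the eye must judge areas rather than lengths along a shared baseline.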

What if there are too many values to display in a bar graph without having to scroll? Let’s look at a larger set of data, sales to 3,356 customers, first using bubbles.

Cool! Assuming they’re all there, that’s a lot of bubbles. What do these bubbles tell us? Some customers buy a lot and some buy little and a bunch buy amounts in between. Anything else? No, that’s pretty much it. And why is only the one bubble that represents Jim Hunt labeled? As we’ll see in a moment, it isn’t the largest.

I can put 3,356 horizontal bars on the screen at once, but they will appear as thin, indistinguishable lines. Also, there won’t be any room for the customer names, but those names don’t appear in the bubble display either, so that’s not a problem. Let’s see how it looks.

This isn’t ideal by any means, but it provides a more informative overview than the bubbles. For example, we can easily see that approximately 10% of the customers made purchases totaling more than $35,000, approximately 70% of them made purchases totaling less than $5,000, and approximately half of them made purchases totaling more than $2,500. We could continue citing similar observations. If we wanted to see and compare individual customers, a sorted, scrolling version of a normal bar graph like the one below would often work when comparing similar values, and we could filter the data to compare specific customers that don’t simultaneously appear on the screen.
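Observations of this kind, which a sorted set of bars lets us read off by eye, amount to simple proportions. The sketch below uses a small, made-up list of purchase totals rather than the 3,356 customers in the example, and the helper names are hypothetical.

```python
def share_above(values, threshold):
    """Fraction of values strictly greater than the threshold."""
    return sum(value > threshold for value in values) / len(values)


def share_below(values, threshold):
    """Fraction of values strictly less than the threshold."""
    return sum(value < threshold for value in values) / len(values)


# Made-up purchase totals in dollars, for illustration only.
purchases = [52000, 9000, 6000, 4200, 3100, 2400, 1800, 1200, 900, 300]

# Sorting in descending order mirrors the sorted bar graph.
ranked = sorted(purchases, reverse=True)

over_35k = share_above(ranked, 35_000)
under_5k = share_below(ranked, 5_000)
over_2500 = share_above(ranked, 2_500)

print(f"more than $35,000: {over_35k:.0%}")
print(f"less than $5,000:  {under_5k:.0%}")
print(f"more than $2,500:  {over_2500:.0%}")
```

A packed bubble display offers no ordered axis from which proportions like these can be estimated.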

When I first learned that packed bubbles would be included in Tableau 8, I immediately sent an email to Chris Stolte, the Chief Development Officer, Pat Hanrahan, the CTO, and Jock Mackinlay, the Director of Visual Analytics. These guys are all respected leaders in the field of information visualization and friends of mine. “Why are you doing this?” I asked with an only slightly veiled sense of disgust.

Chris provided the answers, which I’m sure all make sense to him, but don’t make sense to me. Our perspectives are different. Chris lives and breathes Tableau almost every minute of his life. To the same degree, I live and breathe data visualization, independent of particular products. I believe that a feature should only be added to software that meets the following requirement: it is the best way to do something that really matters. I have the sense that Chris uses a different yardstick. He believes that packed bubbles add value, which he explained as follows:

There were two driving reasons for us building Bubble Charts into the product:

- Incremental construction of views. The first was that we want to create an application where you can incrementally build up visualizations of your data and easily change from one view to another. This is the journey we have been on since the day we wrote the first line of code for Tableau. The vision is that you should be able to easily explore the space of possible visualization of your data to find just the right view to answer your question and to tell the stories in your data. As you place your data on the canvas, you should always be creating useful visual presentations of your data and getting immediate and useful feedback. We have done this well but there have always been places in the flow where users create views that feel broken or that don’t present the user’s data in a natural way. To really help people feel comfortable, I passionately feel that we need to invest in making sure every step is a reasonable view of their data to create a feeling of “safe exploration”. Supporting this flow is what drove us to invest a lot in “best practice defaults”. It is also what led to introducing Bubble Charts (and other charts) in v8.

- Visualization of networks, relationships, and paths. We want to extend Tableau to include visual presentations of data that support answering questions about networks, relationships, and paths. This includes node-link diagrams, adjacency matrices, Sankey diagrams, etc. This introduces the need for additional encodings and visualizations—and flows.

Here’s how I responded to Chris:

- Incremental construction of views. A visualization that represents data in a way that doesn’t support a better understanding should not be in the flow. Packed bubbles fall into this category. The user should always be directed to different views that are useful and optimally informative. Sticking an ineffective visualization between two effective visualizations, which does nothing but serve as a transition between them, adds no value. It will waste time. More concerning is the fact that people will not use packed bubbles as a mere transition but as a visualization that has value in and of itself. Even if you could demonstrate a case when packed bubbles were actually useful, which I did not find, the value that they add would still need to be significantly better than the harm that they will cause if you support them.

- Visualization of networks, relationships, and paths. This is not a justification for packed bubbles. What you must do architecturally to support network displays, etc. is not a valid argument for exposing people to the steps that you took to get there. In the past, you have exposed people to unnecessary requirements that got in their way merely because of architectural constraints. For example, forcing people to use two quantitative scales to combine two types of marks in a single chart (e.g., bars and lines) was unnecessary and harmful to the interface and the user experience.

When I visited Tableau’s website to find an example of packed bubbles, this is what I found:

A small set of bubbles has been combined with a map and two bar graphs. Do the bubbles provide an effective way to display the causes of fires? Hardly. Obviously, a bar graph would handle the task effectively, but these bubbles cannot. Tableau is not only enabling people to display data in this ineffective way, but by showcasing this example they are encouraging the practice. Damn it, when did Tableau decide to nudge people in useless and potentially harmful directions?

The Marketing Department is even featuring bubbles as the new face of Tableau, as you can see below:

Maybe this is what it took to impress Gartner enough to elevate Tableau to the leaders’ section of the BI Magic Quadrant. What will it take for Gartner to grant them a more prominent position than Microsoft—spinning 3-D pie charts that sing? Once a software company starts down the path of adding silly features to a product, it becomes nearly impossible to remove them. Just ask the folks responsible for the charting features of Excel.

Treemaps

Many months ago, when I first learned that Tableau would be adding treemaps, I welcomed the news, but cautioned them to implement them well and to only nudge people to use them when appropriate. Ben Shneiderman created treemaps to display large numbers of values that exceed the number that could be displayed more simply and effectively using a bar graph. Here’s an example that appears in my book Now You See It that was developed using Panopticon:

What we see here is the stock market. Each small rectangle is an individual stock. The larger rectangles group stocks into sectors of the market (financial, healthcare, etc.). Imagine that the size of each rectangle represents the price of the stock today and the color represents its change in price since yesterday (blues for gains and reds for losses). If it could be done, I’d rather view this information in a bar graph, but if I need an overview that includes all of these stocks, there are far too many values to put in front of my eyes at once using bars. A treemap is called a space-filling display, because it takes full advantage of the available space. A treemap displays parts of a whole and does so in a way that handles hierarchies. In this example, stocks are displayed as a three-level hierarchy: the entire market (level 1), individual sectors of the market (level 2), and individual stocks (level 3). Rectangles within rectangles are used to separate the groups.
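The idea of rectangles within rectangles can be sketched with the simple slice-and-dice layout, in which each level of the hierarchy splits its parent's rectangle in the opposite direction. This is only one of several treemap layouts and not necessarily the one that Panopticon or Tableau uses. The `Node` class, the function name, and the stock values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    value: float = 0.0                      # used only by leaves
    children: list = field(default_factory=list)

    def total(self):
        """A leaf's own value, or the sum of its descendants' values."""
        if not self.children:
            return self.value
        return sum(child.total() for child in self.children)


def slice_and_dice(node, x, y, w, h, depth=0, out=None):
    """Collect (name, depth, x, y, w, h) rectangles for the hierarchy.

    Each child receives a share of its parent's rectangle in proportion
    to its total; the split direction alternates with each level.
    """
    if out is None:
        out = []
    out.append((node.name, depth, x, y, w, h))
    total = node.total()
    offset = 0.0
    for child in node.children:
        share = child.total() / total if total else 0.0
        if depth % 2 == 0:   # even levels: children placed side by side
            slice_and_dice(child, x + offset * w, y, share * w, h, depth + 1, out)
        else:                # odd levels: children stacked vertically
            slice_and_dice(child, x, y + offset * h, w, share * h, depth + 1, out)
        offset += share
    return out


# Made-up three-level hierarchy: market, sectors, stocks.
market = Node("Market", children=[
    Node("Financial", children=[Node("Stock A", 30), Node("Stock B", 10)]),
    Node("Healthcare", children=[Node("Stock C", 40), Node("Stock D", 20)]),
])

rects = slice_and_dice(market, 0.0, 0.0, 100.0, 50.0)
for name, depth, x, y, w, h in rects:
    print(f"{'  ' * depth}{name}: x={x:.1f} y={y:.1f} w={w:.1f} h={h:.1f}")
```

Every stock ends up inside its sector's rectangle, and each leaf's area is proportional to its value, which is what makes the grouping visible in a properly nested treemap.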

A treemap belongs among Tableau’s visualizations, but I expected the implementation to be more thorough and reserved for large sets of values. If we look for examples on Tableau’s website, here’s one that we find:

This is a relatively small set of values. Could they be better displayed using a different graph? You bet. A simple bar graph would do the job. Good data visualization software should never encourage us to display small sets of data as a treemap—never! Here’s another example that’s featured on Tableau’s website:

Imagine how much better this would work using two horizontal bar graphs, side-by-side, with the items in each sorted in the same order.

Besides the fact that we’re being encouraged to use treemaps when they aren’t appropriate, Tableau’s treemap itself suffers from a fundamental flaw: it cannot effectively display values in groups. I’ll illustrate. Imagine that we want to compare sales and profits per customer, so we begin with the following treemap.

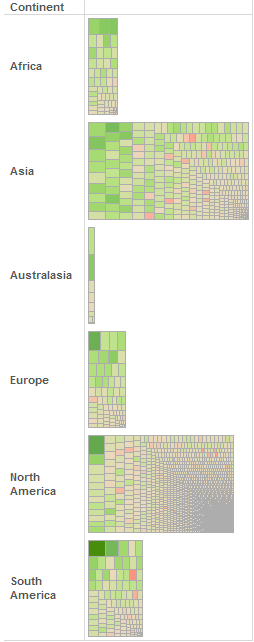

The rectangles’ sizes represent sales and their colors represent profits (green for profits and red for losses, which you can see assuming you’re not color blind). This is a decent start, except for the fact that the labeling seems entirely arbitrary. Let’s continue by breaking customers into continents to show a hierarchy (customers by region), which treemaps were designed to support. When I double-clicked the Continent field, this is what I saw:

Hold on…what just happened? When I added continents, rather than organizing the customers into their respective regions within a single treemap, the visualization automatically switched to separate treemaps of a sort displayed within bars, but little that is useful can be done with this view. The individual rectangles for customers are too small and anonymous without labels to be of much use. Unlike packed bubbles, however, I can imagine occasions when these treemap bars could be enlightening, but I’m concerned that they’ll mostly get used when other forms of display would work better.

When I questioned the folks at Tableau about this, I was told that groups could be formed within a single treemap by dropping a categorical field of data on either the Details or Labels attributes in what Tableau calls the Marks card, but I did this and the treemap remained unchanged. After further correspondence, I learned that in addition to dropping the categorical field onto the Marks card, you must make sure that it appears in the list prior to other fields that are lower in the hierarchy. By placing Continent on the list before Customer Name, the treemap was rearranged as follows:

This is a step in the right direction, even though something is definitely amiss with the labeling, but it isn’t the view that I expected and wanted. As you can see, the continents are not clearly delineated. To delineate groups clearly, we must relinquish the quantitative value represented by variations in colors (in this case profit) so that discrete colors can be used to represent the categorical groups (in this case continent), resulting in this view:

When I asked Jock about this lack of standard treemap functionality, he replied:

We focused in Tableau 8 on the mapping of data fields to graphic properties rather than the formatting techniques for showing nesting found in some treemap implementations such as border width, spacing, “cushioned” rendering, etc.

I’ve seen many implementations of treemaps, but this is the first one I’ve seen that doesn’t allow values to be easily and clearly grouped.

I’m concerned that Tableau is becoming more and more like other software vendors that prioritize product release schedules over quality. This isn’t the only sign of this that I’ve seen. When brushing and linking (coordinated highlighting) was introduced, it couldn’t support proportional brushing, which in my opinion is essential. It still cannot. When extensive formatting capabilities were introduced, the initial interface was designed as a complicated panel with pages and pages of options, much like an old-fashioned dialog box. I can never remember where to find the option that I need. They realized that the interface sucked before it was released, but there wasn’t enough time to fix it, and it remains to this day. When they introduced a way to combine different types of marks (bars, lines, data points, etc.) in a single chart, it required a second quantitative scale and axis, even though it is rarely needed. Why? Because this was the easiest way to deliver the functionality based on the underlying architecture of the software. The convenience of the development team trumped our needs as users. This approach involves unnecessary steps and a confusing interface, but it has not been fixed.

For years I’ve been encouraging my friends at Tableau to add a box plot to its library of visualizations—a form of display that is fundamental to a data analyst’s needs—but it has never risen high enough in the list, while word clouds and packed bubbles, two useless forms of display, have now been added. Obviously, the guys at Tableau have a different perspective and set of priorities than I do. I believe that mine correlates more closely with the needs of data analysts.

The Story of Show Me

In an early version of Tableau, a great little feature called Show Me was introduced. Show Me remains always available with a list of chart types to assist us in selecting appropriate forms of display. Based on the data we’ve selected, Show Me recommends charts that might be useful.

I have always loved Show Me, but my love for it has waned as it’s gradually grown over-complicated and lost sight of its purpose. Rather than suggesting charts that are useful, somewhere along the line Show Me began instead to suggest chart types that are possible. In the beginning, Show Me was simple and elegant, but this is no longer the case. How Show Me has changed represents in microcosm the larger problems that I’ve observed in Tableau as a whole. It is difficult to add features to a product without over-complicating the interface. Good interface design isn’t easy, but it’s necessary.

Below on the right is how Show Me will look in Tableau 8:

It includes 23 types of charts from which to choose. In order, starting at the upper left, the list includes the following:

- Text table

- Symbol map

- Filled map

- Heat map

- Highlight table

- Treemap

- Horizontal bar

- Stacked bar

- Side-by-side bars

- Lines (continuous)

- Lines (discrete)

- Dual lines

- Area chart (continuous)

- Area chart (discrete)

- Pie chart

- Scatter plot

- Circle view

- Side-by-side circles

- Dual combination

- Bullet graph

- Gantt

- Packed bubbles

- Histogram

Show Me has grown to include far too many choices, which undermines its ability to guide us. It has become bloated, awkward, and confusing. I believe that the following shorter list of chart types would serve its purpose more effectively:

- Text table

- Bar: When selected, either a vertical or horizontal bar could be automatically selected based on factors such as the length of labels and the number of values. Regular bars could be easily transformed into stacked bar by dropping a categorical field of data onto the color attribute, so there’s no reason to include stacked bars as a separate choice in Show Me.

- Line: Only one type of line graph needs to appear in Show Me: the type that displays a series of values continuously, without breaks in the line. On rare occasions when what Show Me calls a discrete line—one that has breaks in it—is useful, this could be made available as a simple formatting option. What Show Me calls dual lines is merely a line graph with two quantitative scales. This also could be handled as a simple formatting option, not just for line graphs, but for bar graphs and area charts as well.

- Area chart: Similar to a line graph above, only one kind of area chart is needed in Show Me: the one that displays values continuously, without breaks.

- Dot plot: This is the conventional name for what Show Me currently calls a circle view and side-by-side circles.

- Scatter plot: (Dropping a quantitative field of data on the size attribute turns a scatter plot into a bubble plot.)

- Histogram: In appearance, this is a bar graph, but it possesses special functionality that is needed to display frequency distributions, and as such it deserves to be on the list.

- Box plot: A visual analysis product without a box plot is missing something essential.

- Geographical map: Symbols would serve as the default mark, but color fills would be readily available as an option.

- Heat map: This is a heat map arranged as a matrix of columns and rows. What is currently named a heat map in Show Me is misnamed, for it uses symbols that primarily vary by size, not color. This functionality is already readily available whenever symbols such as circles or squares are being used, which is the default when a dot plot is selected, by dropping a categorical variable on the size attribute.

- Treemap

- Gantt chart

This list reduces our choices from 23 to 12. Not bad. Each item on the list actually qualifies as a different type of chart; none are mere variations of another chart on the list with different formatting. Navigating these choices would be so much simpler.

Notice that I left out the pie chart. When the guys at Tableau first added the pie chart to the product, they explained to me that they were doing so only because pie charts are sometimes useful on geographical maps to divide bubbles into parts of a whole. For this sole purpose, there is no reason to include the pie chart in Show Me. Bubbles on maps can easily be subdivided like pies into parts by dropping a categorical field of data onto the color attribute. By including the pie chart in Show Me, people are encouraged to use it when other forms of display, usually a bar graph, would work more effectively.

Also notice that there is no chart type in my list that serves as a combination of marks (e.g., bars and lines). It shouldn’t be necessary to list separate chart types for every possible combination of marks. A good interface would allow us to select a series of values in a graph and change its mark to any type that’s appropriate.

And finally, notice that I’ve removed from the list my own invention, the bullet graph. I did this because a bullet graph can be treated as a variation of a bar graph or a dot plot, which could be easily invoked as a formatting option.

In Show Me, too many charting options are suggested as viable views, often when they’re ineffective. Here’s Show Me again:

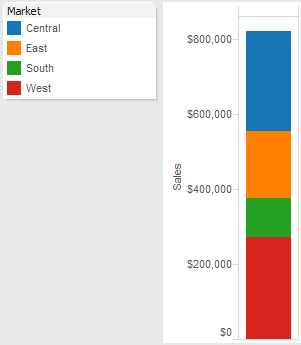

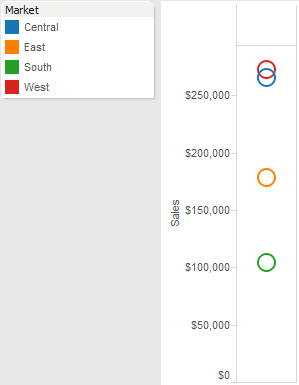

The following chart types are highlighted in Show Me because they supposedly provide useful ways to view the two fields of data that I’ve selected: Market and Sales:

- Text table

- Heat map

- Highlight table

- Treemap

- Horizontal bar

- Stacked bar

- Pie chart

- Circle views

- Packed bubbles

Let’s see which views are actually useful based on the data that I’ve selected. We’ve already seen the text table, which is definitely useful.

Here’s what Show Me is calling a heat map:

This isn’t actually a heat map, as I mentioned before, because colors are not being used to encode the values; sizes are. Rarely would we choose to display these four sales values using the sizes of squares.

Here’s a highlight table:

This is slightly more useful as a way to add a visual representation (color) to a text table, but other forms of display will usually work better.

Here’s a treemap:

It would never make sense to display a small set of values such as this as a treemap.

Here’s a horizontal bar graph:

Unless we need precise values, as provided in the text table, this is clearly the most effective way to display these values. Fortunately, this is the chart that’s featured as the best choice by Show Me.

Here’s a stacked bar graph:

Try to compare the stacked market values. To compare them easily and accurately, we would definitely want a regular bar graph. The only time this stacked bar would suffice is when we primarily want to know total sales with only a rough sense of how it’s divided into markets.

Here’s a pie chart:

Try comparing the slices or determining individual values. This is never going to serve as a useful view of these values.

Here’s a circle view:

Only the bar graph does a better job than this circle view (a.k.a., strip plot). Using the positions of the circles to encode their values works well for our brains.

What’s left? Oh yeah, the new packed bubbles chart. Here it is:

Thank God Tableau decided to add this chart to its Show Me recommendations. Just imagine the enlightening views this will provide! (Yes, I’m being sarcastic.)

Why Is This Happening?

What’s causing Tableau to compromise the integrity of its product? Given the circumstances, here’s a list of the possibilities that I would usually consider:

- Has their mission to go public led them to add ineffective but eye-popping sparkle to the product to entice investors?

- Has their marketing plan led them to curry favor with analysts who don’t understand analytics in general or data visualization in particular, such as Gartner and Forrester, by adding features that might appeal to them?

- Have they been tempted by the possibility of big deals with prominent companies to add senseless features to win those deals?

- Is the development organization, which has now become huge, inclined to add features merely because they are easy to implement based on the underlying architecture or to design them in hobbled ways because the best design cannot be implemented without changing the architecture?

- Have they lost touch with their roots in academic research and no longer remember how the human brain works?

- Have they gradually become like most other software organizations, limited to an engineering perspective and driven by sales, as opposed to one that is balanced by a commitment to design and inspired by opportunities to provide the best possible product?

- Have they become slaves to product release schedules even when that means that new features will be implemented poorly?

- Have they become so immersed in the product and out of touch with those who rely on it that they’ve forgotten what really matters?

These are the questions that I would ask about any vendor in these circumstances, but they aren’t questions that I want to consider when trying to understand Tableau. Although no organization is immune to losses in effectiveness as it grows, I’ve always believed that if any software company could stand firm in its vision and commitments, Tableau was that company. The truth is, I really don’t know what’s driving their decisions to compromise the integrity of the product and its usefulness to the world. Even for the folks at Tableau, I suspect that most of the reasons behind their choices are unconscious. I suspect that most of the poor design choices stem from the fact that when people become immersed in a product, their perspective becomes myopic and skewed and they can no longer see the product through the eyes of its users or the guiding lens of best practices.

What criteria should be used to guide product development? A product should never be designed merely to do what’s easy…to do what’s fun for the developer…to do what can be done in the allotted time…to do what will produce the highest revenues…or even to do what people are asking for most. There’s nothing wrong with design that is easy, fun, profitable, or any of the other benefits in this list, but these should not serve as the primary drivers of product design and development.

Here’s a simple guideline for good design: “Design the product to do what’s most useful in the best way possible.”

What’s “most useful?” The fact that customers are asking for something in particular does not in and of itself make it useful. People sometimes want things that are far from useful or they want things to be designed in ways that don’t actually work. Anything that helps us solve real-world problems is useful. Problems are not created equal, however. Solving some problems is more important than solving others. The value of a solution should be tied to its potential for making the world a better place. It’s not that word clouds and packed bubbles are far down the list of problem-solving features for a visual data analysis product; they aren’t on the list at all.

Great products are never those that try to please everyone and do everything. Rather, they begin with a clear, focused, and coherent vision, and they persistently do what’s necessary to express that vision as effectively as possible without detour or compromise. Based on what I’m seeing, I fear that Tableau is trying to make its product do everything one can imagine doing with data. This can’t be done. It shouldn’t be done. Making the attempt will result in a product that is complicated beyond usability. It is reasonable for Tableau to make it easy for analysts to share the results of their work with others in polished ways by providing basic data presentation functionality, but it doesn’t make sense for the existing product to become a comprehensive platform for the development of infographics. Such a tool would require functionality similar in complexity to Adobe Illustrator, very little of which would ever be needed by a data analyst. If Tableau wants to compete with products such as D3, which provides a flexible programming environment for the development of interactive, web-based infographics, it would make sense to develop a separate tool that integrates nicely with the existing product. Sometimes good design involves setting practical limits and sticking to them.

I believe that Tableau is increasingly veering from the path. It pains me to say so. It’s all right for a company’s vision to evolve, and I’m sure that Tableau’s has in meaningful ways, but what I’ve described doesn’t qualify as progress. If you’re one of Tableau’s customers and you share my opinion, I urge you to make your concerns known. Please join me in reminding the good folks at Tableau that they are better than some of the design choices that they’re currently making.

Take care,