Thanks for taking the time to read my thoughts about Visual Business Intelligence. This blog provides me (and others on occasion) with a venue for ideas and opinions that are either too urgent to wait for a full-blown article or too limited in length, scope, or development to require the larger venue. For a selection of articles, white papers, and books, please visit my library.


November 8th, 2011

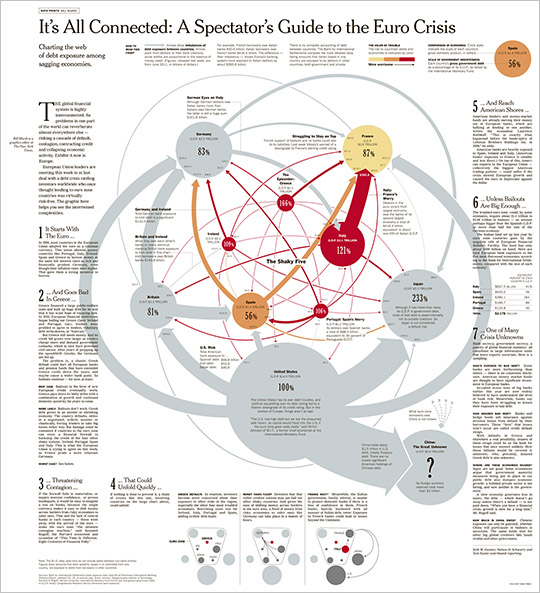

A few days ago I received an email from Thomas Watkins, who is an avid reader of this blog and a past participant in one of my Visual Business Intelligence Workshops. He asked my opinion of this infographic, which recently appeared in the New York Times.

It looks a bit like a solar system with really strange orbits. Displayed as a node-link diagram, the information seems quite complex—much more so than necessary. Rather than responding to Thomas with my opinion, I asked for his. As a highly-motivated student of data visualization, Thomas volunteered to provide more than I asked: “I started to write my thoughts, and I figured it would be better if I tried to actually attempt a redesign.” Three days later, he emailed me the following:

In the original graph by Bill Marsh of The New York Times, the first thing that jumps out at me is that it’s difficult to understand what’s even going on. I think The New York Times editing staff realized this, because they offer a fairly lengthy breakdown demystifying the visualization. This graph suffers from the classic problem of encoding quantitative values as area instead of length or 2D location. Trying to figure out which countries owe debt to other countries and the amount owed requires the reader to carefully trace arrows from one bubble to another. The graph also doesn’t even attempt to visually encode the very important metric of ‘debt to GDP ratio’; rather, they put the percentage in each bubble. Perhaps there was no more room left to graph it considering the busyness of the current design.

If the purpose of this graph is to convey the feeling of mass confusion that’s associated with the current financial crisis, then maybe it does make sense to use a wild array of bubbles and lines. However, if we want to communicate the broad picture while allowing specific visual comparisons to be made easily, then this visualization could’ve been designed more effectively. Overall I think it succeeds more as a diagram than as a graph.

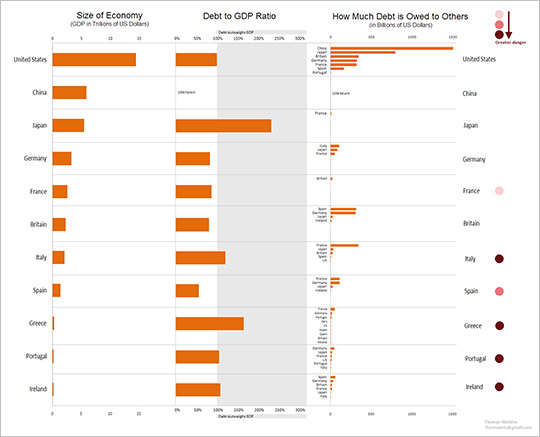

Thomas then went on to propose the following visualization as a replacement, minus the textual narrative that would be helpful to tell the story completely.

Thomas explains his version of the story as follows:

In my redesign I used horizontal bar graphs to rank each country by the size of their economy. Next I made a column for the ‘debt to GDP’ ratio. I figured that the two most important messages regarding this metric were (1) how high the ratio is, and (2) the ratio’s deviation from 100%. Therefore I made it a bar graph with a reference region. For the 3rd column I nested a set of ranked horizontal bar graphs within each country’s row. Finally, I plotted a simple dot next to each of the “worrisome” countries to indicate the ‘color of trouble’ from the original graph. My color of trouble varied on color intensity rather than hue to promote continuous rather than categorical perception.

I found Thomas’ version far superior to the original. What’s more, using Thomas’ design, additional metrics could be added to the display to enrich the story without overcomplicating it. Perhaps my friends at the New York Times should hire Thomas as an adviser.

Take care,

(Author’s note, upon further reflection: I realize now on Sunday, November 13th that I should have chosen my words more carefully in my original blog post when I described Thomas’ visualization as a “replacement” for the original network diagram that appeared in the New York Times. It is not a replacement in the sense that it intends to do what the original intended, but in the sense that it attempted to provide a more useful and effective visualization of the data; one that displays important information about the indebtedness of Euro zone countries to one another in an accessible way, which complements the story better than the original network diagram. Thomas’ visualization does not attempt to display the complex interconnectedness of debt. He created his own visualization because he found the original network diagram inaccessible and confusing. When he asked for my opinion of the original diagram, I had a similar reaction, which I have to most static node-and-link network visualizations that are designed for journalistic purposes: they look complicated and confusing, and as such do not invite readers to examine the data in useful ways. Network visualizations attempt to provide a synthesis of the many parts and relationships that make up a complex set of interconnections. They are difficult to decipher, in part because the information is itself complex, and in part because our brains cannot process a complex set of visual connections as a whole when displayed as nodes and links. When a network visualization is well designed, we can discern a few useful attributes regarding the whole—mostly outliers and a few predominant patterns. Beyond this, we must break the information down into parts and focus on them one at a time, which almost always requires the ability to interact with the visualization, such as by filtering out what’s not needed or by highlighting objects of focus in the context of the whole. 
I did not object to the original diagram that appeared in the New York Times because I felt that it was poorly designed, but because, in static form, it was not an effective use of static graphics to complement the story for its readers.)

October 28th, 2011

On Tuesday in this blog I expressed my frustration with VisWeek’s information visualization research awards process. I don’t want to leave you with the impression, however, that the state of information visualization research is bleak. Each year at VisWeek I find a few gems produced by thoughtful, well-trained information visualization researchers. They identified potentially worthy pursuits and did well-designed research that produced useful results. While puzzling over the criteria that the judges must have used when selecting this year’s best paper, I spent a few minutes considering the criteria that I would use were I a judge, and came up with the following list, with points totaling 100:

Effectiveness (It does what it’s supposed to do and does it well.) — 30 points

Usefulness (What it does addresses real needs in the world.) — 30 points

Breadth (Many people will find it useful.) — 10 points

Applicability (It applies to a wide range of uses.) — 10 points

Innovativeness (It does what it does in a new way.) — 10 points

Technicality (It exhibits technical excellence.) — 10 points

Given more thought, I’m sure I would revise this to some degree, but this gives you an idea of the qualities that I believe should be awarded and the importance of each.
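To make the arithmetic behind this weighting concrete, here is a minimal sketch in Python. Only the six criteria and their point values come from the list above. The function name `score_paper`, the 0-to-1 rating scale, and the sample ratings are hypothetical, chosen purely for illustration, and do not describe any actual judging process.

```python
# Point values taken from the list above; they total 100.
WEIGHTS = {
    "effectiveness": 30,
    "usefulness": 30,
    "breadth": 10,
    "applicability": 10,
    "innovativeness": 10,
    "technicality": 10,
}


def score_paper(ratings):
    """Combine per-criterion ratings (assumed scale: 0.0 to 1.0) into a 0-100 score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= ratings[name] <= 1.0:
            raise ValueError(f"rating for {name} must be between 0 and 1")
    # Each criterion contributes its rating multiplied by its point value.
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical ratings for an imaginary paper that is technically excellent
# and novel, but neither effective nor useful. These numbers are made up.
technically_impressive = {
    "effectiveness": 0.2,
    "usefulness": 0.1,
    "breadth": 0.1,
    "applicability": 0.1,
    "innovativeness": 0.8,
    "technicality": 1.0,
}

print(score_paper(technically_impressive))
```

Because effectiveness and usefulness together carry 60 of the 100 points, a paper that excels only in innovativeness and technicality cannot score above 40 under this weighting, no matter how impressive the engineering.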

One research paper, among a few, that thrilled me with its elegance and exceptional usefulness was “Evaluations of Artery Visualizations for Heart Disease Diagnosis,” presented yesterday by Michelle Borkin of Harvard University’s School of Engineering and Applied Sciences.

I’ll allow the abstract that opens the paper to summarize the work:

Heart disease is the number one killer in the United States, and finding indicators of the disease at an early stage is critical for treatment and prevention. In this paper we evaluate visualization techniques that enable the diagnosis of coronary artery disease. A key physical quantity of medical interest is endothelial shear stress (ESS). Low ESS has been associated with sites of lesion formation and rapid progression of disease in the coronary arteries. Having effective visualizations of a patient’s ESS data is vital for the quick and thorough non-invasive evaluation by a cardiologist. We present a task taxonomy for hemodynamics based on a formative user study with domain experts. Based on the results of this study we developed HemoVis, an interactive visualization application for heart disease diagnosis that uses a novel 2D tree diagram representation of coronary artery trees. We present the results of a formal quantitative user study with domain experts that evaluate the effect of 2D versus 3D artery representations and of color maps on identifying regions of low ESS. We show statistically significant results demonstrating that our 2D visualizations are more accurate and efficient than 3D representations, and that a perceptually appropriate color map leads to fewer diagnostic mistakes than a rainbow color map.

What you see on the left side of the image above is the conventional rainbow-colored 3D visualization that most cardiologists rely on today. Although this visualization accurately represents the physical structure of coronary arteries, this is not necessarily the best view for identifying areas of low ESS. One of the problems is occlusion, which forces cardiologists to rotate the view so it can be seen from all perspectives, which is time-consuming and prone to error. The use of rainbow colors as a heat map to represent a range of quantitative values from low to high ESS is another significant problem that leads to inefficiency and error.

Michelle Borkin eloquently told the story of how she and her colleagues, an interdisciplinary team that included medical experts, worked through the process of designing, testing, and improving HemoVis. Part of the story that fascinated me was the way that they dealt with the expectations and biases of cardiologists, formed by their training and experience with existing systems. People often develop strong preferences for visualizations that perform poorly, merely because they are familiar or superficially attractive. Opening them to other possibilities can be challenging. Because the team worked so well with the cardiologists and because they did fine work with benefits that could be demonstrated, they managed to loosen the cardiologists’ hold on the familiar and open them to a solution that worked much better. When adoption of a new system results in lives being saved, this is a great success.

I don’t want to describe HemoVis in detail, because I want you to read the paper and fully appreciate the beauty of this work. I do want to mention a few features, however, that illustrate the design’s excellence. As you can see in the HemoVis screen below, the coronary arteries are arranged as a simple tree structure rather than according to their actual physical layout. There are three major branches, with sub-branches coming off of them. To make the interior walls of the arteries entirely visible at a glance, they have been opened up and flattened, much as cardiologists sometimes “butterfly” an artery. The width of the artery representation varies in relation to the circumference of the artery. A diverging color scale with gray for the low range of risk and red for the high range of risk worked dramatically better than the rainbow scale of conventional images. Just as maps of London’s metro system are easier for commuters to use when the lines are arranged differently from the actual geography, this rearrangement of the coronary arteries and simplified color scale perfectly supports the task of spotting the locations of low ESS. Form supporting function this effectively is a thing of beauty. Tests involving cardiologists demonstrated that HemoVis required little training and resulted in a significant increase in the number of risk areas that were identified, the elimination of false positives, and a dramatic reduction in the amount of time that was needed to complete the task. In other words, HemoVis has the potential of saving lives.
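For readers curious about how a gray-to-red scale of this kind can be produced, here is a minimal Python sketch. It is not HemoVis’s actual color map, which is documented in the paper. The endpoint colors, the function names, and the use of straight linear interpolation in RGB are all assumptions made for illustration, and a perceptually tuned scale would typically be built in a perceptual color space instead.

```python
# Assumed endpoint colors (hypothetical, not taken from HemoVis).
LOW_RISK_GRAY = (200, 200, 200)
HIGH_RISK_RED = (200, 0, 0)


def lerp(start, end, t):
    """Linearly interpolate between start and end for t in [0, 1]."""
    return start + (end - start) * t


def risk_to_rgb(risk):
    """Map a normalized risk value (0 = lowest, 1 = highest) to an RGB triple."""
    t = min(1.0, max(0.0, risk))  # clamp out-of-range input
    return tuple(
        round(lerp(low, high, t))
        for low, high in zip(LOW_RISK_GRAY, HIGH_RISK_RED)
    )


# Print a small ramp from lowest to highest risk.
for step in range(5):
    risk = step / 4
    print(risk, risk_to_rgb(risk))
```

The point of a ramp like this is that only one thing changes as risk rises: the color moves steadily from neutral gray toward red, so higher-risk regions stand out without the reader having to memorize an arbitrary ordering of hues, as a rainbow scale requires.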

Follow this link to download the paper to see an exemplar of fine information visualization research. Several other information visualization papers that have been presented at VisWeek this year exhibit fine work as well (including all of the infovis papers submitted by Stanford), but this one in particular touched my heart. (Yes, the pun was intended.)

Take care,

October 26th, 2011

I’ve often complained that much information visualization research is poorly designed, produces visualizations that don’t work in the real world, or is squandered on things that don’t matter. To some degree this sad state of affairs is encouraged by the wayward values of many in the community. A glaring example of this is the “InfoVis Best Paper” award that was given at this year’s conference (and many in past years as well). Despite the obvious technical talent that went into developing the “Context-Preserving Visual Links” that won this year’s award, these visual links are almost entirely useless for practical purposes.

Context-preserving visual links are lines that connect items in a visualization or set of related visualizations to highlight those items and thus make them easier to find, and do so in a way that minimally occludes other information. There are many ways that items can be highlighted. The best methods apply visual attributes to those items that we perceive preattentively, causing them to pop out in the display. This approach highlights items without adding meaningless visual content to the display. As you can see in the following example of context-preserving visual links, items are highlighted by the addition of lines to connect them.

The lines are the most salient objects in the display, yet they mean nothing. Drawing someone’s attention to visual content that is meaningless undermines the effectiveness of a visualization. They direct attention where it isn’t needed. Even worse in this case, they suggest meanings that don’t actually exist by forming paths, which usually suggest meaningful routes through data. Also, lines that connect items suggest that those items are somehow related, but this is not always the case.

My intention here is not to devalue the talents of these researchers, and certainly not to discourage them, but to bemoan the fact that their obvious talents were misdirected. What a shame. Why did no one recognize the dysfunctionality of the end result and warn them before all of this effort was…I won’t say wasted, because they certainly learned a great deal in the process, but rather “misapplied,” leading to a result that can’t be meaningfully applied to information visualization?

The fact that this work was given the “Best Paper” award indicates a fundamental problem in the information visualization research community: because so many in the community are focused on the creation of technology—a computer engineering task—they lose sight of the purpose of information visualization, which is to help people think more effectively about data, resulting in better understanding, better decisions, and ultimately a better world. Except in those rare instances when context-preserving visual links represent meaningful paths that connect items that are in fact related, they are useless. The fact that they connect items in a way that minimally occludes other items in the visualization is a significant technical achievement, but one that undermines use of the data.

Technical achievement should be rewarded, but not technical achievement alone. More important criteria for judging the merits of research are the degree to which it actually works and the degree to which it does something that actually matters. Information visualization research must be approached from this more holistic perspective. Those who direct students’ efforts should help them develop this perspective. Those who award prizes for work in the field should use them to motivate research that works and matters. Anything less is failure.

Take care,

October 26th, 2011

Almost every year at VisWeek there is a panel, workshop, or presentation or two that asks the questions, “Is visualization a science?” or “Can we distinguish good visualizations from bad?” I’m frustrated by the fact that we still need to ask these questions. As the field of visualization continues to grow and struggles to mature, we increasingly recognize the ways in which it lacks discipline. We fear that visualization will wander somewhat aimlessly in fits and starts as we search for a more defined sense of what we’re trying to accomplish and better guidelines for doing it well.

This year’s instance of this was a panel of presentations on the topic “Theories of Visualization — Are There Any?” Two members of the panel, Colin Ware and Jarke Van Wijk, both expressed the opinion that visualization is not a distinct science, and should not be, but an interdisciplinary application of many sciences. Visualization, rather than a science in its own right, is more like design and engineering, which builds a bridge between science and the real world, primarily through technologies that help us think and communicate visually to solve real problems.

Given this premise, it necessarily follows that the merits of visualization should be determined by its ability to solve real problems and to do so in the most effective way possible. Can this be done? Why are we still asking this question? Of course it can be done. Testing the outcomes of a particular visualization or visualization system, if it has clear goals, is relatively straightforward. Unfortunately, this is rarely done, which is perhaps why we still feel like we must continue asking if it can be done. Beyond testing outcomes in a specific way (it worked fairly well for these particular subjects for this particular task under these particular conditions), it would be even more helpful if we could test the merits of a visualization in a more generalizable manner, thereby extending findings to a broader range of applications.

I look forward to the day when we all agree that visualization should be informed by good science and should be evaluated by its ability to solve real problems, so we can spend most of our time and energy doing that without hesitation. Yesterday, I attended a well-deserved tribute to George Robertson, one of the great pioneers in the field. George, who recently retired from Microsoft Research, originally coined the term “information visualization.” I was touched when he told us that, after many years of working in various aspects of computer science beginning in the 1960s, he eventually settled on information visualization because he wanted to do something that improved the lives of people. We who work in this field, like George, have a wonderful opportunity to do something that matters, something that makes the world a better place. For this reason, it’s a travesty when time is wasted covering the same old territory over and over, or frittered away on poorly designed research or on research that can’t possibly matter. People throughout the world in organizations of all types are waiting for us to give them the means to see more clearly. Those who see information visualization as a way to open the eyes of the world to greater understanding will do great work, because it matters.

Take care,

August 22nd, 2011

Thomas G. West has known for over 20 years what has since been gradually recognized by other cognitive scientists: dyslexia has an upside. Not only this, but the special abilities of many dyslexics are desperately needed in the world today. What has been traditionally deemed a disability is a different wiring of the brain—one that once worked exceptionally well and might be coming into its own again. West explores the problems and opportunities of dyslexia and the important role of visual thinking in an updated version of his wonderful book In the Mind’s Eye: Creative Visual Thinkers, Gifted Dyslexics, and the Rise of Visual Technologies, Second Edition (2009).

Dyslexia literally means trouble with words. The brains of dyslexics, while not well wired for verbal thinking, are wired for abundant abilities in visual thinking. Three modes of thinking are important to human endeavor in the world today: literacy (thinking in words), numeracy (thinking in numbers), and graphicacy (thinking in images). Since the Middle Ages, societies have primarily valued literacy and numeracy, giving those who excel in these aptitudes an elite status. Graphicacy, which I’m using to describe visual-spatial thinking, served humans well in preliterate societies and has continued to serve them well in non-literate endeavors ever since, but it was downgraded in value in medieval times as the way of the masses. Literacy and numeracy became the twin pillars of our educational systems, with little or no appreciation for graphicacy. Those who function predominantly as visual thinkers have been pushed to the margins as disabled, base, and dumb. Much of the work that is enabled by literacy and numeracy, however, is now increasingly being done by machines. Tasks that follow a strict set of procedures or involve computation can be done faster and more accurately by computers. West describes this situation in the following excerpts from his book:

For some four hundred or five hundred years we have had our schools teaching basically the skills of the medieval clerk—reading, writing, counting, and memorizing texts. Now it seems that we might be on the verge of a new era, when we will wish to, and be required to, emphasize a very different set of skills—those of a Renaissance man such as Leonardo da Vinci. With such a change, traits that are considered desirable today might very well be obsolete and unwanted tomorrow. In place of the qualities desired in a well-trained clerk, we might, instead, find preferable a habit of innovation in many diverse fields, the perspective of the global generalist rather than the narrowly focused specialist, and an emphasis on visual content and analysis over parallel verbal modes.

If we continue to turn out people who primarily have the skills (and outlook) of the clerk, however well trained, we may increasingly be turning out people who will, like the unskilled laborer of the last century, have less and less to sell in the marketplace. Sometime in the not too distant future machines will be the best clerks. It will be left to humans to maximize what is most valued among human capabilities and what machines cannot do—and increasingly these are likely to involve the insightful and integrative capacities associated with visual modes of thought. (West, pp. 306 and 307)

To the extent that…[expert] systems proliferate and come to be depended upon, to the extent they show themselves to be useful and reliable, these systems might gradually begin to displace and devalue an increasingly significant proportion of the functions and knowledge painfully gained through years of training by many professionals—physicians, engineers, attorneys, administrators, scientists, and others. Indeed, an ironic aspect of these developments is that these systems may be most useful, and then most threatening, mainly in those disciplines that are the most highly systematized. In such cases, evolution might appear, once again, to move backward…

Consequently, we might expect that the more formalized, organized, and consistent the knowledge of a particular discipline, the more easily it could be gradually taken over by machines. Whereas, on the other hand, the less formalized and the more intuitive the knowledge of a particular discipline, the less easily it could be taken over by machines. (West, pp. 109 and 110)

In time…it may be seen that the most useful forms of education are those that focus on the larger perspectives, the larger patterns, within fields and across fields, interspersed by investigations of depth here and there. (West, p. 113)

What computers can’t do well—see the whole, rather than a collection of parts—is a human skill, especially of visual thinkers. What is visual thinking exactly, and how does it relate to pattern recognition?

We may consider “visual thinking” as that form of thought in which images are generated or recalled in the mind and are manipulated, overlaid, translated, associated with other similar forms (as with a metaphor), rotated, increased or reduced in size, distorted, or otherwise transformed gradually from one familiar image into another…“Pattern recognition” has been a major concern in artificial intelligence research and has proved to be quite complex. It has turned out to be one of those things which is very difficult for computers but easy for most human beings…For our purposes, we will consider pattern recognition to be the ability to discern similarities of form among two or more things, whether these be textile designs, facial resemblance of family members, graphs of repeating biological growth cycles, or similarities between historical epochs…From pattern recognition it is but a short step to “problem solving,” since, at least for its more common aspects, problem solving generally involves the recognition of a developing or repeating pattern and the carrying out of actions to obtain desired results based on one’s understanding of this pattern. (West, p. 36)

We who work with information to find and understand the stories that dwell within, especially visual analysts, keenly recognize the importance of these skills. The ability to see how things connect to form complex systems, how they influence one another in a subtle dance of interaction, is imperative if we hope to solve the big problems that plague us today. These big problems, in many respects, have emerged because of our focus on parts while ignoring the whole—the fallout of specialization.

Everyone agrees that we have a problem. Our technological culture is drowning in its own success. Masses of data and information are accumulating everywhere. Up to now, the basic strategy for dealing with these growing masses of information has been long, mind-numbing education and reckless, blinkered specialization. That this strategy has been effective in a great many respects, so far, there can be little debate. The problems we are discussing are a tribute to its ample and abundant success, so far. However, after long success, it is becoming increasingly clear that this strategy may be entering a phase of diminishing return. It has long been recognized that this strategy has always had built-in problems. The more one knows in one’s own, increasingly narrow area, the more one is ignorant in other areas, the more difficult is effective communication between unrelated areas, and the more unlikely it is that the larger whole will be properly perceived or understood. Like the student who reads too much small print, the specialist’s habitual near focus often promotes the myopic perspective that precludes the comprehension of larger, more important patterns. The distant view of the whole is blurred and unclear. If you focus only on a small group of stars at the edge of the Milky Way, you will not perceive the larger structure of the whole galaxy of which the group is one tiny part.

The specialist strategy breeds its own limits. Pieces of the puzzle in separate areas remain far apart, or come together only after decades of specialist resistance, or success in one area leads to great problems in another. Material abundance produces waste-disposal problems; cars and aircraft produce wonderful mobility for many people, but also deplete resources, produce accidental fatalities, and increase pollution; success in vaccination, hygiene, and health care lead to all the problems of great concentrations of human population.

As the specialist strategy continues to be pursued, a sense of the whole is increasingly lost. Many know their areas; few see the whole. Many are expert; few are wise. But the visual thinkers, late bloomers, and creative dyslexics we have been dealing with have often been outsiders or reluctant participants in this specialist culture—especially those who are energetic, and globally minded, who seem always to be interested in everything, unable to settle down to a “serious” (that is, highly specialized) area of study. (West, p. 298)

In collaboration with visual thinking skills, visualization technologies have an important role to play in solving the big problems of today.

With the further development of smaller, cheaper, but more powerful computers having sophisticated visual-projection capabilities, we might expect a new trend to be emerging in which visual proficiencies could play an increasingly important role in areas that have been almost exclusively dominated in the past by those most proficient in verbal-logical-mathematical modes of thought. Increasingly, graphic images are the key. (West, p. 56)

Graphicacy is no longer the sole realm of artists and architects. Graphical skills and technologies are now essential to our survival.

Take care,
